Final exams

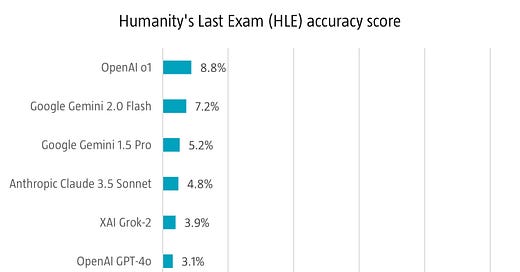

Like with human students, artificial intelligence models are routinely scored against a barrage of tests to measure progress. For many years, Imagenet, which required AI models to identify objects shown in photographs, was the leading benchmark for measuring AI progress. Overtime, the majority of AI models were able to score 90% or higher on the Imagenet accuracy test. Image recognition became a solved problem. Subsequent tests like the Multitask Language Understanding (MMLU) and Level 5 Math exams have also been largely solved by leading AI models. This year, for the first time, the Stanford University Human AI Index report included newer tests like the ARC Foundation’s measure of Artificial General Intelligence (AGI) and another very challenging new test called Humanity’s Last Exam (HLE). The developers of the HLE exam included submissions from academics across disciplines from ancient Greek history to quantum physics. To date, AI has failed the exam with OpenAI’s o1 model earning the highest mark at just 8.8%. Please also see our recent note Tracking AI in 10 charts.

Source: Stamford HAI 2025 Index , April 2025